Bringing Data Sources Together for ML Data Pipelines

20/05/2020 16:14:16 (GMT)

Machine Learning models learn from data

Machine Learning (ML) models are able to perform so well because of the underlying data that they have been trained on. Training is done using a dataset of observed results and the inputs at that point of observation. A major problem many of our clients face when starting out with Artificial Intelligence (AI) and ML is generating a training set as a starting point. This could be because their existing data is simply not in a format that is ready to be processed in such a way. However, they are the lucky ones as the solution to their problem often just involves pre-processing the data in a way that transforms it into a dataset ready for ML. The real challenge comes when the client would like to blend their existing data with third-party data, or are even approaching ML with a good idea but no data of their own to start with. In this article we will discuss what the central challenges are in such scenarios and also how it is possible to minimise the headache as much as possible, not only up front but also long term as the deployed ML system ingests and learns from new data going forward.

Third Party Data

Third party data can often be a useful addition to an existing dataset by providing context to inputs. Many of our clients ask us to collect and incorporate third-part data into their existing datasets for that reason. Sometimes a client will come to us with a great idea for a ML project but not have any of the data from which to make a start. Either way, it is then our job to find a way to legally obtain that data in an automated way so that new data can always be utilised in any outputs from the model and, also, for updating the model over time to ensure continued viability. That is where an application programming interface (API) comes in. APIs are commonly offered as a way to connect with third-party microservices in order to interact with that service. One of the capabilities that is usually offered as part of an API is a way to request the data from the service. This all sounds wonderful; however, APIs are retired and replaced. Inconsistencies, errors and issues can pile up because of changes or bad data entry on the side of the third party. So far, we have been focused on the case where we are collecting data from APIs as large data set collections. Usually such services are offered when the data has been processed or grouped for archive. Examples of these type of datasets are like many of the datasets offered by the UK Office for National Statistics.A major problem with this type of data can be that it is often packed with inconsistencies and errors as the datasets themselves are often retrospectively altered and added to by different sources.

If you have the time a good solution to this is to make use direct data streams that some APIs offer, for example the Twitter Streaming API. Unlike the majority of archived datasets these types of data streams often contain a higher quality of data as they tend to be utilised by mission critical systems. In the case of Twitter, their API is actually what their public facing platform is built on. However, you have probably noticed that plumbing this type of data collection directly into a data pipeline to train and run a ML model would result in a cold start problem. That is to say, it would take a long time to accumulate the amount of data needed to be of any use with ML. Furthermore, connection consistency can also play a part and result in data collection being interrupted and slowed down. Additionally, to reduce load on their servers third party APIs often come with a rate limit imposed. While this is a fair limitation, it contributes to slowing down data collection even more, especially if the data being requested is large in disk size. Approaching an ML solution with a direct connection to these services will likely mean that successfully training a ML model can take weeks or longer.

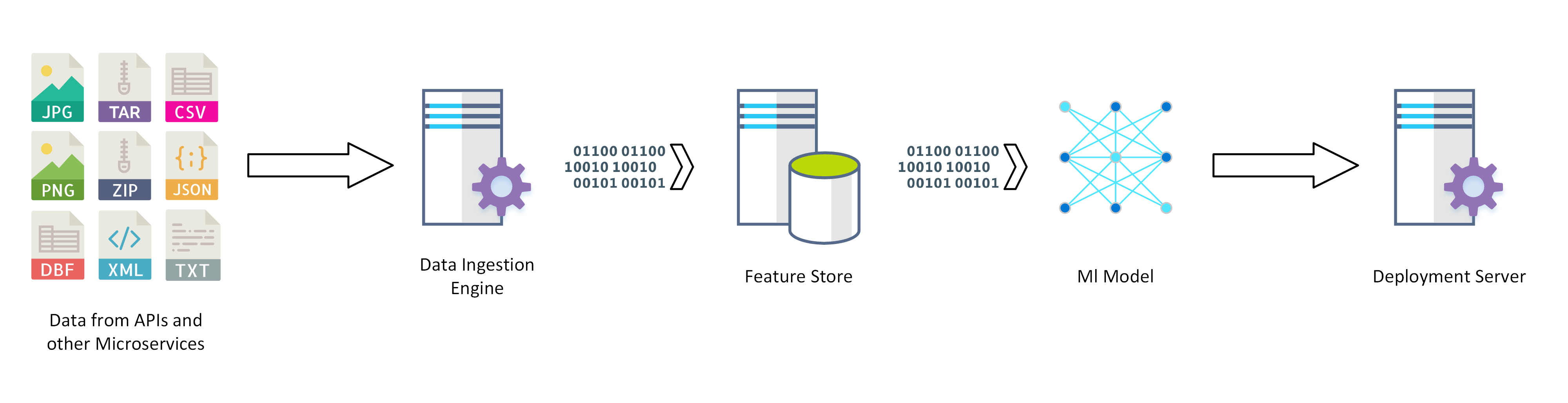

Feature Stores

AI solutions are a continuous system. This not only applies to the intelligence outputs that are created, but also to the on-going learning that occurs. This learning happens in iterations, where the ML models deployed are continually improved and updated, just as a human would learn from their experiences and exposure to new data. The faster these iterations happen then the faster the system can improve as a whole. If data is not readily available and in a reliable state it can take weeks for a team to put together new hypotheses and train a new model as they need to constantly rework data pipes just to get access to new data. The solution to this, and a good way of streaming AI system pipelines in general, is to make use of a Feature Store. Whilst not an entirely direct comparison a Feature can be thought of as, a row in a traditional table of data and a Feature Store creates a standard way for data scientists to define the Features that a system should use. There is a lot going on under the hood but essentially the job of the Feature Store is to handle generating training data by coordinating multiple Big Data systems to process new incoming data and past collected data from both third-party sources and internally. Merging all the data that we care about in this way eliminates the problems related to the AI system you want to build fetching the data in real-time. Instead, everything can be accessed in one round trip. Additionally, the Feature Store is fault resistant as they ensure data is available in a consistent and error free way for multiple AI systems to utilise.

While Feature Stores are a great way to gain ownership of your own Features, and decouple your system from that of either external or internal microservices, sadly some issues still remain. One flaw is that if an API schema changes it can incapacitate the Feature Store. Therefore, ingestion for APIs you have no control over should be handled with extreme care and automated monitoring be applied so that a fix to the way the Feature Store ingests the data can be deployed quickly. The upside is that this would allow time to resolve any issues before they may become an issue for the AI system that resides downstream from the Feature Store itself. Another consideration to take into account is the cost associated to pre-processing and storing large amounts of data into a Feature Store in the first place. The trade-off occurs in the value placed on reducing the complexity and time required to allow developers to get an updated version of an ML model trained and deployed. For these reasons, we often recommend such an approach to clients that either would require continuous improvement of their AI systems or if they intend to roll out multiple AI systems overtime. As the number of ML or Deep Learning models in an AI system grows, so does the value of a Feature Store.